Red Hat OpenShift is a Kubernetes-based platform for container orchestration. OpenShift differentiates itself from Kubernetes through features such as tight integration with Red Hat Linux, long-term enterprise support, platform as a service (PaaS), and more.

Whether you’re using OpenShift or Kubernetes to manage your infrastructure, there are some general security limitations of containerized environments that you need to keep in mind. At the heart of all identity security concerns is the Secret Zero problem: reliance on a master key or “secret zero” that can unlock all other credentials, giving potential attackers access to everything in the organization.

With container orchestration, Dockerfiles and Helm charts become potential vectors for revealing secrets or for sharing identities, roles, and authorization too broadly. Important features of a containerized infrastructure therefore include encryption of secrets, separation of duties and other policies, easy resource management that doesn’t restrict developer access, secret rotation, and centralized auditing.

One of the key differences between OpenShift and Kubernetes is OpenShift’s default stance toward platform security. For example, OpenShift uses security context constraints (SCCs) for limiting and securing the cluster, defining six SCCs that default to restricted access.

CyberArk Conjur enhances the default security stance of OpenShift by managing and rotating secrets and other credentials, and by securely passing secrets stored in Conjur to applications running in OpenShift.

In this article, we’ll show you how to set up Conjur in a typical OpenShift environment quickly and efficiently. You’ll see how to store passwords outside the repository while keeping them accessible to applications inside OpenShift. This is a practical, hands-on article; if you’re interested in the theory behind it, check out the Conjur blog.

Setting Up OpenShift

Like its sister orchestrator Kubernetes, OpenShift is available on most cloud platforms, so it’s easy to install and get your own hands-on experience. For this example, we’ll use Google Cloud, but AWS and Azure offer a very similar experience.

To install it on Google Cloud, you just need a Google Cloud account and a project. We’ll use the gcloud command line tool to create an OpenShift project.

$ gcloud init
$ gcloud projects create openshift-conjur --name "OpenShift and Conjur"
$ gcloud config set project openshift-conjur
$ gcloud config list

Note that the project ID (“openshift-conjur”) should be unique, so use your own naming.

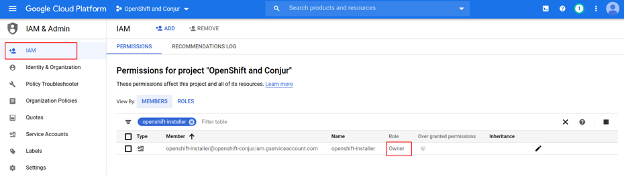

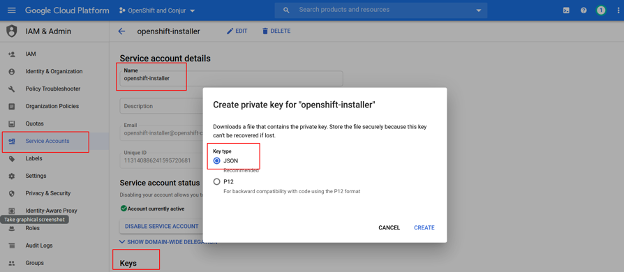

The OpenShift installer creates a cluster in Google Cloud on behalf of a user. For ease of installation, I will give that user Owner rights. In production, you’ll need to assign narrower permissions. Now we create the necessary Service Account and store its key locally as an openshift-installer.json file.
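The commands for this step are not reproduced in the text, so here is a minimal sketch of what they might look like. The Service Account name openshift-installer is my assumption, chosen to match the key file name, and roles/owner mirrors the Owner rights mentioned above. Set DRY_RUN=1 to print the commands instead of running them.

```shell
# Sketch: create a service account for the installer and download its key.
# SA_NAME is a hypothetical name; PROJECT must match your own project ID.
PROJECT="openshift-conjur"
SA_NAME="openshift-installer"
SA_EMAIL="${SA_NAME}@${PROJECT}.iam.gserviceaccount.com"

# Print the command when DRY_RUN is set, otherwise execute it.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

create_installer_sa() {
  run gcloud iam service-accounts create "$SA_NAME" --display-name "$SA_NAME"
  # Owner is convenient for a demo only; narrow the role in production.
  run gcloud projects add-iam-policy-binding "$PROJECT" \
    --member "serviceAccount:${SA_EMAIL}" --role roles/owner
  run gcloud iam service-accounts keys create openshift-installer.json \
    --iam-account "$SA_EMAIL"
}
```

Running `DRY_RUN=1 create_installer_sa` first lets you review the three gcloud calls before anything is changed in your project.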

OpenShift will need to make requests to some Google Cloud APIs, so you’ll need to enable the following ones:

- cloudresourcemanager

- dns

- compute

- iam

Enable each API with a command like this one:

$ gcloud services enable cloudresourcemanager.googleapis.com --project openshift-conjur
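Since the same command has to be repeated for each of the four APIs, a small loop saves some typing. This is only a sketch: it assumes each short name in the list maps to `<name>.googleapis.com`, as in the command above, and the `run` helper and DRY_RUN switch are my own additions.

```shell
# Sketch: enable every API the installer needs, one call per API.
PROJECT="openshift-conjur"   # replace with your own project ID

# Print the command when DRY_RUN is set, otherwise execute it.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

enable_apis() {
  for api in cloudresourcemanager dns compute iam; do
    run gcloud services enable "${api}.googleapis.com" --project "$PROJECT"
  done
}
```

Call `DRY_RUN=1 enable_apis` to preview the commands, then `enable_apis` to run them.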

After installation, OpenShift should be accessible by domain name. You can register this name with any registrar of your choice. For the examples, I’ll use the subdomain ocp.example.com and delegate its resolution to Google Cloud nameservers.

Create a managed zone first:

$ gcloud dns managed-zones create ocp --dns-name ocp.example.com --description "OpenShift and Conjur"
$ gcloud dns managed-zones describe ocp
...
nameServers:
- ns-cloud-e1.googledomains.com.
- ns-cloud-e2.googledomains.com.
- ns-cloud-e3.googledomains.com.
- ns-cloud-e4.googledomains.com.
...

Add these nameservers in the DNS management section of your domain registrar.

Next, go to the OpenShift website and create a cluster. You can try it for free by going to https://www.openshift.com/try and clicking Create your own cluster, then Try it in the cloud.

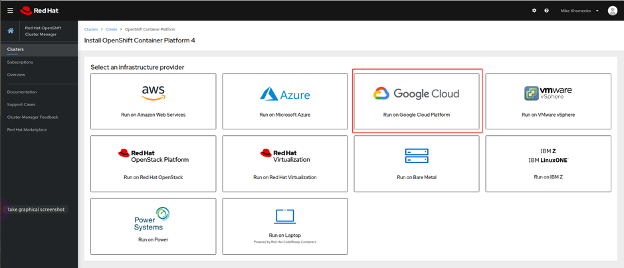

If you are not logged in, enter your credentials or create a Red Hat account. After a successful login, you will see a page where various options for the OpenShift deployment are described. I’m using Google Cloud:

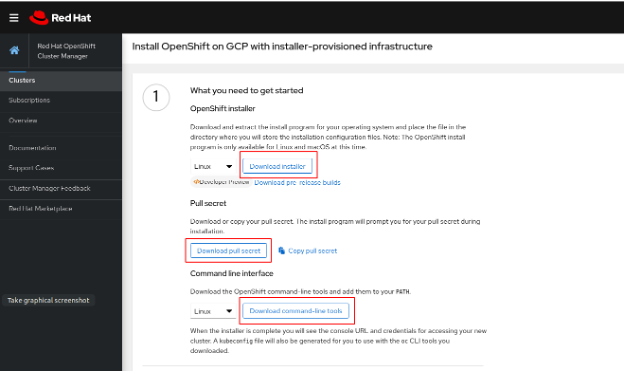

Then you’ll have the choice to either rely on the installer’s default settings or to specify a number of settings yourself. Select, as recommended, Installer-provisioned infrastructure.

Download the installer itself, a pull secret (used when Docker images from the OpenShift repository are downloaded to an OpenShift cluster), and two console utilities for connecting to the cluster: oc and kubectl.

After downloading, unzip and run the installer. As the installer runs, files may be generated. You can specify a directory for them so as not to litter the current directory:

$ ./openshift-install create cluster --dir=./gcp

You can optionally add debug output to the ./openshift-install command:

$ ./openshift-install create cluster --dir=./gcp --log-level=debug

You’ll need to set up some parameters for the cluster:

| Parameter | Value | Comment |
| --- | --- | --- |
| SSH Public Key | Path to SSH key | You can generate an SSH key and put it here. It may be useful for gathering logs in case any errors occur. |
| Platform | gcp | |
| Service Account | Absolute path to openshift-installer.json | We created such a key when we created the Service Account. |
| Project ID | openshift-conjur | Your project name. |
| Region | europe-west2 | Choose an appropriate region. |
| Base Domain | ocp.example.com | Your domain name. |
| Cluster Name | ocp-test | Use an appropriate name. |
| Pull Secret | Content of file pull-secret.txt | We initially downloaded this from the OpenShift website. |

If you want to understand the installation process in more detail, the official installation documentation deserves attention. You can start reading from Configuring a GCP project.

Note that the default disk requirements in OpenShift won’t work with the free tier on Google Cloud and may need to be adjusted for other platforms. You may also need to configure CPU usage quotas. You can adjust the allotted resources on your cloud platform of choice, or you can configure the resources to be used by OpenShift in the install-config.yaml file.

In this excerpt, I adjusted the disk usage to 50GB and explicitly set the instance type.

compute:
- architecture: amd64
  hyperthreading: Enabled
  name: worker
  platform:
    gcp:
      osDisk:
        diskSizeGB: 50
        diskType: pd-ssd
      type: n1-standard-2
      zones:
      - europe-west2-a
      - europe-west2-b
      - europe-west2-c
  replicas: 3
controlPlane:
  architecture: amd64
  hyperthreading: Enabled
  name: master
  platform:
    gcp:
      osDisk:
        diskSizeGB: 50
        diskType: pd-ssd
      type: n1-standard-4
      zones:
      - europe-west2-a
      - europe-west2-b
      - europe-west2-c
  replicas: 3

Wait for the installer to finish its job. When it’s done, an OpenShift UI URL will be provided as well as a command to configure kubectl.

INFO Install complete!
INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/<path>/gcp/auth/kubeconfig'
INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ocp-test.ocp.example.com
INFO Login to the console with user: "kubeadmin", and password: "XyZO-ABCD-eFgH-ijKL"

Run the export command as recommended, then unpack the openshift-cli archive and copy the oc and kubectl utilities to a standard location for custom executables (usually /usr/local/bin).
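The unpack-and-copy step is not spelled out in the text, so here is a rough sketch. The archive name is a placeholder I made up, and I’m assuming the archive holds oc and kubectl at its top level; adjust both to what you actually downloaded. With DRY_RUN=1 the commands are only printed.

```shell
# Sketch: unpack the client archive and install oc and kubectl.
# ARCHIVE is a placeholder; use the file name you actually downloaded.
ARCHIVE="openshift-client-linux.tar.gz"
BIN_DIR="/usr/local/bin"

# Print the command when DRY_RUN is set, otherwise execute it.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

install_clients() {
  # Extract only the two binaries from the archive.
  run tar -xzf "$ARCHIVE" oc kubectl
  # Copy them into BIN_DIR with executable permissions.
  run sudo install -m 0755 oc kubectl "$BIN_DIR"
}
```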

Check the availability of the OpenShift cluster:

$ oc get nodes
NAME                            STATUS   ROLES    AGE   VERSION
ocp-test-klsz4-master-0         Ready    master   69m   v1.18.3+6025c28
ocp-test-klsz4-master-1         Ready    master   69m   v1.18.3+6025c28
ocp-test-klsz4-master-2         Ready    master   69m   v1.18.3+6025c28
ocp-test-klsz4-worker-a-pbhrn   Ready    worker   57m   v1.18.3+6025c28
ocp-test-klsz4-worker-b-8xrr5   Ready    worker   57m   v1.18.3+6025c28
ocp-test-klsz4-worker-c-cmxvn   Ready    worker   57m   v1.18.3+6025c28
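If you want to script this check rather than read the table by eye, a small filter over the `oc get nodes` output is enough. This is a sketch of my own, not part of the OpenShift tooling: it treats any STATUS other than exactly Ready (for example Ready,SchedulingDisabled) as not ready.

```shell
# Sketch: succeed only if every node listed by `oc get nodes` is Ready.
# Reads the table on stdin so it can be tried without a cluster.
all_nodes_ready() {
  awk '
    NR > 1 { n++ }                       # count nodes, skipping the header row
    NR > 1 && $2 != "Ready" { bad++ }    # STATUS is the second column
    END { exit (n == 0 || bad > 0) }     # fail on no nodes or any non-Ready node
  '
}

# Usage: oc get nodes | all_nodes_ready && echo "cluster is up"
```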

Now the OpenShift cluster is set up and we can install Conjur.

Setting Up CyberArk Conjur

Conjur is a way to store sensitive data (secrets) in an external place, rather than inside an application or its repository. Conjur also provides a way to retrieve that data inside an application on demand.

We’re going to use an open-source Conjur version. There is also an Enterprise version called Application Access Manager.

The standard way to deploy any application to Kubernetes/OpenShift is to use Helm. Conjur already has a pre-configured Helm Chart, making this a straightforward task. We’ll use Helm version 3 as it doesn’t require installation on the OpenShift side. You can find the Conjur OSS Helm Chart repo on GitHub.

$ helm version --short
v3.0.1+g7c22ef9
$ helm repo add cyberark https://cyberark.github.io/helm-charts
$ helm repo update
$ helm search repo conjur
NAME                 CHART VERSION  APP VERSION  DESCRIPTION
cyberark/conjur-oss  2.0.0                       A Helm chart for CyberArk Conjur

For this demo, we’ll choose a simple installation where both Conjur and PostgreSQL, which it uses as the default database, are installed together in the OpenShift cluster. All data in Conjur databases is encrypted. The encryption key is generated before installation:

$ DATA_KEY=$(docker run --rm cyberark/conjur data-key generate)

$ helm upgrade conjur cyberark/conjur-oss --install --set dataKey="${DATA_KEY}" --set authenticators="authn\,authn-k8s/demo" --version 2.0.0 --namespace default

Besides dataKey, here we set two ways to authenticate clients in Conjur:

- authn for standard authentication (where login/API key are used)

- authn-k8s for pods (where the certificate is used for pod authentication).

You can read about supported authentication types if you would like to know more.

Once Conjur is running, initialize the built-in admin user and create a default account for further usage. We can do it in one command:

$ oc exec -it conjur-conjur-oss-6466669767-zbk6n -c conjur-oss -- conjurctl account create default
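The pod name in the command above carries a generated suffix, so yours will differ. One way to avoid copying it by hand is to pick it out of the pod list. This sketch assumes the Conjur pod is the only one whose name contains conjur-conjur-oss, which matched my deployment but may not match yours.

```shell
# Sketch: pick the Conjur pod from `oc get pods -o name` output instead of
# hard-coding its generated name. Reads the list on stdin.
conjur_pod_name() {
  # Take the first matching line and strip the "pod/" prefix.
  grep 'conjur-conjur-oss' | head -n 1 | sed 's|^pod/||'
}

# Usage:
#   POD="$(oc -n default get pods -o name | conjur_pod_name)"
#   oc exec -it "$POD" -c conjur-oss -- conjurctl account create default
```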

Save the admin API key locally. You’ll need it later.

You’ll also need a Conjur service endpoint:

$ oc get svc conjur-conjur-oss-ingress -ojsonpath='{.status.loadBalancer.ingress[0].ip}'

34.105.142.103

Your IP will differ. I’ll use <EXTERNAL_IP> from this point on to show where to insert your own IP.

With the Conjur server running, you can connect to it. For that we use a command line tool called conjur-cli. Run the conjur-cli Docker version locally:

$ docker run --rm -it cyberark/conjur-cli:5
# conjur init --url=https://<EXTERNAL_IP> --account=default
Trust this certificate (yes/no): yes
Wrote certificate to /root/conjur-default.pem
Wrote configuration to /root/.conjurrc

Note that the server certificate is written to /root/conjur-default.pem, and the default domain, conjur.myorg.com, is used as its Common Name. You can change this either by setting the ssl.hostname parameter when deploying the Conjur OSS Helm release or by editing the hosts file in the Docker container.

Simply configure the Conjur server IP <EXTERNAL_IP> to correspond to the domain:

# echo "<EXTERNAL_IP> conjur.myorg.com" >> /etc/hosts
# conjur init --url=https://conjur.myorg.com --account=default

Also, save the generated certificate (the file /root/conjur-default.pem) from the container to your local machine for later usage. I will refer to this file as <CERTIFICATE_FILE>.

Then log in as the admin user, entering the admin API key you saved earlier when prompted:

# conjur authn login -u admin
Logged in

Managing Secrets with Conjur

We’re ready to create a secret that will be used later by the application. We’ll first declare what application has access to this secret. Such a declaration is done in the policy file, which should be loaded into the Conjur server. Policy files are just declarations of different resources, like users, groups of users, hosts (non-human Conjur clients), layers (groups of hosts), and so on.

A policy example can be found on GitHub. The official “Get Started” guide is quite useful.

Let’s directly try a sample policy. Recall that we run commands inside the Docker conjur-cli container. Here’s an example of a policy:

- !policy
  id: BotApp
  body:
  - !user Dave
  - !host myDemoApp
  - !variable secretVar
  - !permit
    role: !user Dave
    privileges: [read, update, execute]
    resource: !variable secretVar
  - !permit
    role: !host myDemoApp
    privileges: [read, execute]
    resource: !variable secretVar

Load this policy into the Conjur server:

# conjur policy load root policy.yaml

Store the output somewhere. You’ll need the value of api_key for the application. (For the sample we’ll assume its host is BotApp/myDemoApp and refer to its key as <BotApp API key>.)

Here we check the loaded policy:

# conjur list
[
  "default:policy:root",
  "default:policy:BotApp",
  "default:user:Dave@BotApp",
  "default:host:BotApp/myDemoApp",
  "default:variable:BotApp/secretVar"
]

Now we can create a secret called secretVar with the value “qwerty12”:

# conjur variable values add BotApp/secretVar qwerty12

Let’s see how to retrieve this secret value from an application.

Running an Application Manually

We’ll use Ubuntu as the application pod. Let’s define the Ubuntu pod on a local machine (not from the conjur-cli container) and deploy it into OpenShift. Here’s the content of ubuntu.yaml to configure the pod:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ubuntu
  namespace: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ubuntu
  template:
    metadata:
      labels:
        app: ubuntu
    spec:
      containers:
      - name: ubuntu
        image: ubuntu:18.04
        command: ["/bin/bash", "-c", "tail -f /dev/null"]

Now we’ll deploy the pod using this configuration:

$ oc create namespace demo
$ oc adm policy add-scc-to-user anyuid -z default -n demo # Allow root in Ubuntu pod
$ oc apply -f ubuntu.yaml
$ oc -n demo get po
NAME                     READY   STATUS    RESTARTS   AGE
ubuntu-65d559b86-9lnf5   1/1     Running   0          5s
$ oc -n demo exec -it ubuntu-65d559b86-9lnf5 -- bash
# apt update
# apt install -y curl

Let’s obtain the access token by calling the Conjur server’s authenticate endpoint with the <BotApp API key> provided:

# curl -d "<BotApp API key>" -k https://conjur-conjur-oss.default.svc.cluster.local/authn/default/host%2FBotApp%2FmyDemoApp/authenticate > /tmp/conjur_token

The command above writes the access token to the file /tmp/conjur_token. Base64-encode the token and pass it in the Authorization header to retrieve the secret:

# CONT_SESSION_TOKEN=$(cat /tmp/conjur_token| base64 | tr -d '\r\n')
# curl -s -k -H "Content-Type: application/json" -H "Authorization: Token token=\"$CONT_SESSION_TOKEN\"" https://conjur-conjur-oss.default.svc.cluster.local/secrets/default/variable/BotApp%2FsecretVar
qwerty12

That’s the manual process to have an application retrieve a secret.
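If you repeat these calls often, the two fiddly parts (URL-encoding the identifier and building the Authorization header) can be wrapped in small helpers. This is a sketch under my own assumptions: the function names are made up, only the "/" character is encoded (other reserved characters would need the same treatment), and -k skips TLS verification exactly as in the demo commands.

```shell
# Sketch: helpers for the REST calls shown above.
CONJUR_URL="https://conjur-conjur-oss.default.svc.cluster.local"
CONJUR_ACCOUNT="default"

# Conjur expects "/" inside an identifier to be URL-encoded as %2F.
conjur_encode_id() { printf '%s' "$1" | sed 's|/|%2F|g'; }

# Build the Authorization header from a raw access token read on stdin.
conjur_auth_header() {
  printf 'Authorization: Token token="%s"' "$(base64 | tr -d '\r\n')"
}

# Fetch a secret. Usage: conjur_get_secret <token-file> <variable-id>
conjur_get_secret() {
  curl -s -k -H "$(conjur_auth_header < "$1")" \
    "${CONJUR_URL}/secrets/${CONJUR_ACCOUNT}/variable/$(conjur_encode_id "$2")"
}
```

With these in place, `conjur_get_secret /tmp/conjur_token BotApp/secretVar` performs the same request as the curl command above.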

Automated Token Retrieval with OpenShift

For applications deployed in OpenShift, the access token retrieval process can be automated.

I’ll describe the Kubernetes Authenticator Client, which is one of three methods to integrate an application and Conjur server on the OpenShift platform. See Kubernetes Authenticator Client and Kubernetes Authentication with Conjur for more information.

In short, a special auth container is deployed in the same pod as your application. This container uses a certificate created for the default account (remember the conjur init command and the certificate file?) and its OpenShift name (namespace, container name, Service Account) to authenticate in the Conjur server.

After successful authentication, it retrieves the access token and puts it into a directory shared with the application container. The application can simply use this token file in its API calls to the Conjur server.
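One practical consequence of this design is that the application container may start before the auth container has written the token. A simple guard is to wait for the file to appear. The sketch below is my own helper, not part of the Conjur client; the path in the usage line is the one this article’s pod configuration uses.

```shell
# Sketch: block until the authenticator container has written the token.
# Arguments: token file path, then a timeout in seconds (default 30).
wait_for_token() {
  local file="$1" remaining="${2:-30}"
  while [ ! -s "$file" ]; do            # -s: file exists and is not empty
    [ "$remaining" -gt 0 ] || return 1  # give up once the timeout is spent
    sleep 1
    remaining=$((remaining - 1))
  done
}

# Usage: wait_for_token /run/conjur/access-token 60 || exit 1
```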

Let’s do it. Conjur recommends storing the certificate as a ConfigMap.

$ oc -n demo create configmap conjur-cert --from-file=ssl-certificate=<CERTIFICATE_FILE>

Continue by adding an ubuntu-auth container to an Ubuntu pod (taken as an example application) and running the application on behalf of the demo-app Service Account:

$ oc adm policy add-scc-to-user anyuid -z demo-app -n demo

And here’s an example ubuntu.yaml configuration:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: demo-app
  namespace: demo
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ubuntu
  namespace: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ubuntu
  template:
    metadata:
      labels:
        app: ubuntu
    spec:
      containers:
      - name: ubuntu
        image: ubuntu:18.04
        command: ["/bin/bash", "-c", "tail -f /dev/null"]
        env:
        - name: CONJUR_APPLIANCE_URL
          value: "https://conjur-conjur-oss.default.svc.cluster.local/authn-k8s/demo"
        - name: CONJUR_ACCOUNT
          value: default
        - name: CONJUR_AUTHN_TOKEN_FILE
          value: /run/conjur/access-token
        - name: CONJUR_SSL_CERTIFICATE
          valueFrom:
            configMapKeyRef:
              name: conjur-cert
              key: ssl-certificate
        volumeMounts:
        - mountPath: /run/conjur
          name: conjur-access-token
          readOnly: true
      - name: ubuntu-auth
        image: cyberark/conjur-authn-k8s-client
        env:
        - name: MY_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: MY_POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: CONJUR_AUTHN_URL
          value: "https://conjur-conjur-oss.default.svc.cluster.local/authn-k8s/demo"
        - name: CONJUR_ACCOUNT
          value: default
        - name: CONJUR_AUTHN_LOGIN
          value: "host/conjur/authn-k8s/demo/demo/service_account/demo-app"
        - name: CONJUR_SSL_CERTIFICATE
          valueFrom:
            configMapKeyRef:
              name: conjur-cert
              key: ssl-certificate
        volumeMounts:
        - mountPath: /run/conjur
          name: conjur-access-token
      serviceAccountName: demo-app
      volumes:
      - name: conjur-access-token
        emptyDir:
          medium: Memory

Now we apply the configuration:

$ oc apply -f ubuntu.yaml

To make this example work we should also add a corresponding policy.

Note that writing policy correctly requires some experience. It’s useful to know how to enable and disable debugging on the Conjur server (in both cases, the pod will be restarted):

Here’s an example of enabling:

$ oc set env deployment/conjur-conjur-oss -c conjur-oss CONJUR_LOG_LEVEL=debug

And here’s an example of disabling:

$ oc set env deployment/conjur-conjur-oss -c conjur-oss CONJUR_LOG_LEVEL-

During a debugging session, you may find errors like this in the Conjur server logs:

Authentication Error: #<Errors::Authentication::Security::WebserviceNotFound: CONJ00005E Webservice 'demo' not found>

This means that we need to create a webservice in Conjur policy.

You might find an error like this:

Host id default:host:conjur/authn-k8s/demo/demo/service_account/demo-app extracted from CSR common name
Authentication Error: #<NoMethodError: undefined method `RoleNotFound' for Errors::Authentication::Security:Module>

This means we lack a host declaration for our application.

Authentication Error: #<Errors::Authentication::Security::RoleNotAuthorizedOnWebservice: CONJ00006E 'default:host:conjur/authn-k8s/demo/demo/service_account/demo-app' does not have 'authenticate' privilege on demo>

And that one says the host doesn’t have the authenticate privilege on the webservice.
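To keep the three cases straight while debugging, you can pipe the Conjur logs through a small filter that maps each error to the missing policy element. The function name and hint texts below are my own; the error class names are the ones from the log lines above.

```shell
# Sketch: translate the Conjur log errors above into the missing policy piece.
# Reads log lines on stdin and prints one hint per recognized error.
conjur_policy_hint() {
  while IFS= read -r line; do
    case "$line" in
      *WebserviceNotFound*)
        echo "add a !webservice to the authenticator policy" ;;
      *RoleNotAuthorizedOnWebservice*)
        echo "permit the host or its layer to authenticate on the webservice" ;;
      *RoleNotFound*)
        echo "declare a !host for the application" ;;
    esac
  done
}

# Usage: oc logs deployment/conjur-conjur-oss -c conjur-oss | conjur_policy_hint
```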

Below I provide the resulting policy configuration, which should be loaded with the command:

# conjur policy load root app.yaml

Note: We also added certificate variables here. Read more about how we generated their values on the Enable Authenticators for Applications page.

After applying this policy, we should run the bash script provided on that page, replacing <AUTHENTICATOR_ID> with “demo” and <CONJUR_ACCOUNT> with “default”.

# cat app.yaml

- !policy
  id: conjur/authn-k8s/demo
  body:
  - !webservice
  - !layer
    id: demo-app
  - !host
    id: demo/service_account/demo-app
    annotations:
      authn-k8s/namespace: demo
      authn-k8s/authentication-container-name: ubuntu-auth
  - !grant
    role: !layer demo-app
    member: !host demo/service_account/demo-app
  - !permit
    resource: !webservice
    privilege: [ authenticate ]
    role: !layer demo-app
  # CA cert and key for creating client certificates
  - !policy
    id: ca
    body:
    - !variable
      id: cert
      annotations:
        description: CA cert for Kubernetes Pods.
    - !variable
      id: key
      annotations:
        description: CA key for Kubernetes Pods.
  - !variable test
  - !permit
    role: !layer demo-app
    privileges: [ read, execute ]
    resource: !variable test

After loading the policy, the authenticator will be able to successfully retrieve the access token from the Conjur server and put it into the /run/conjur/access-token file. Everything is ready to just use this token. You can create a variable to try it out:

# conjur policy load root app.yaml
# conjur variable values add conjur/authn-k8s/demo/test 1234567890

Finally, let’s put it to the test:

$ oc -n demo exec -it ubuntu-64877b4d84-jw6r4 -c ubuntu -- bash
# apt update && apt install -y curl
# CONT_SESSION_TOKEN=$(cat /run/conjur/access-token| base64 | tr -d '\r\n')
# curl -s -k -H "Content-Type: application/json" -H "Authorization: Token token=\"$CONT_SESSION_TOKEN\"" https://conjur-conjur-oss-ingress.default.svc.cluster.local/secrets/default/variable/conjur%2Fauthn-k8s%2Fdemo%2Ftest
1234567890

Cool! The Ubuntu application can now use this secret variable without holding any credentials of its own. All the hard work is done behind the scenes by the Conjur components. We can simply use the results.

Next Steps

In this article, we showed you how to set up CyberArk Conjur on the OpenShift platform. The resulting OpenShift/Conjur solution helps secure your applications by making sure secrets are provided by the secure vault, not floating around in your application configuration.

Try it yourself. Download Conjur and add an extra layer of protection for your OpenShift environment by managing secrets effectively.

If you have any questions or doubts, get in touch with our support or request a custom demo.

John Walsh has served the realm as a lord security developer, product manager and open source community manager for more than 15 years, working on cybersecurity products such as Conjur, LDAP, Firewall, Java Cryptography, SSH, and PrivX. He has a wife, two kids, and a small patch of land in the greater Boston area, which makes him ineligible to take the black and join the Knight’s Watch, but he’s still an experienced cybersecurity professional and developer.